The world of IT operations is evolving at breakneck speed. Traditional ITOps (IT Operations) tools are struggling to keep up with the demands of modern infrastructure, which is increasingly dynamic, distributed, and complex. Enter AIOps: a game-changing approach that uses artificial intelligence to streamline operations, improve efficiency, and reduce downtime. One of the most promising platforms in this space is KeepHQ, an open-source AIOps and alert management solution.

In this post, we'll explore why AIOps is becoming the future of IT operations and how KeepHQ can replace legacy ITOps applications.

The Limitations of Legacy ITOps

Traditional ITOps tools were built for an era when static infrastructure and monolithic applications were the norm. Today, these tools face several challenges:

- Alert Fatigue: Too many alerts from disparate tools overwhelm teams.

- Lack of Context: Legacy systems often lack the intelligence to correlate alerts and identify root causes.

- Manual Processes: Time-consuming, manual workflows lead to slower incident resolution.

- Scalability Issues: They struggle to handle the scale and complexity of modern cloud-native environments.

Given these limitations, organizations are seeking smarter, more agile solutions, and AIOps is stepping up to fill the gap.

What is AIOps?

AIOps (Artificial Intelligence for IT Operations) combines machine learning, big data, and automation to:

- Analyze vast amounts of IT operations data in real time.

- Detect patterns and anomalies.

- Automate repetitive tasks.

- Provide actionable insights for proactive problem resolution.

The goal of AIOps is simple: to make IT operations smarter, faster, and more efficient.
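To make "detect patterns and anomalies" concrete, here is a minimal sketch of one of the simplest anomaly detectors: a rolling z-score that flags a metric sample when it sits more than a few standard deviations away from the recent window. This is an illustrative assumption, not the technique KeepHQ or any specific AIOps product uses; the function name, window size, and threshold are hypothetical.

```python
from statistics import mean, stdev

def find_anomalies(samples: list[float], window: int = 5, threshold: float = 3.0) -> list[int]:
    """Return indices of samples that deviate from the trailing window
    by more than `threshold` standard deviations (rolling z-score)."""
    anomalies = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat history: treat any change as anomalous.
            if samples[i] != mu:
                anomalies.append(i)
            continue
        if abs(samples[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies

# Hypothetical latency samples (ms) with one obvious spike at index 7.
latency_ms = [120, 118, 125, 122, 119, 121, 124, 480, 123, 120]
print(find_anomalies(latency_ms))
```

Note that the spike also inflates the window for the next few samples, which is one reason production systems tend to use more robust statistics or learned models rather than a plain z-score.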

Why KeepHQ?

KeepHQ is an open-source AIOps platform that excels in alert management and incident response. It's designed to address the key pain points of traditional ITOps while remaining flexible and cost-effective. Here's why you should consider replacing your legacy ITOps application with KeepHQ:

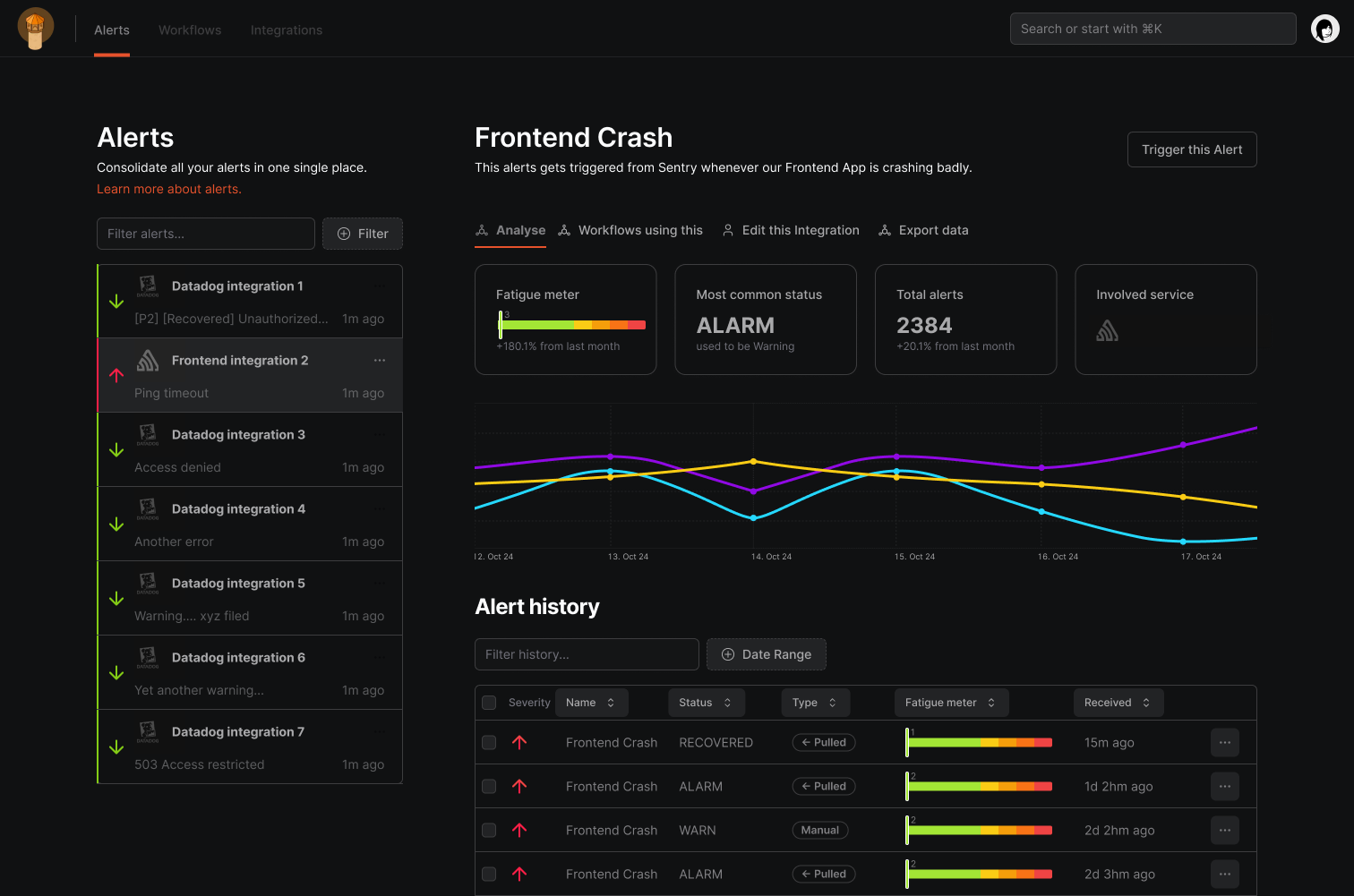

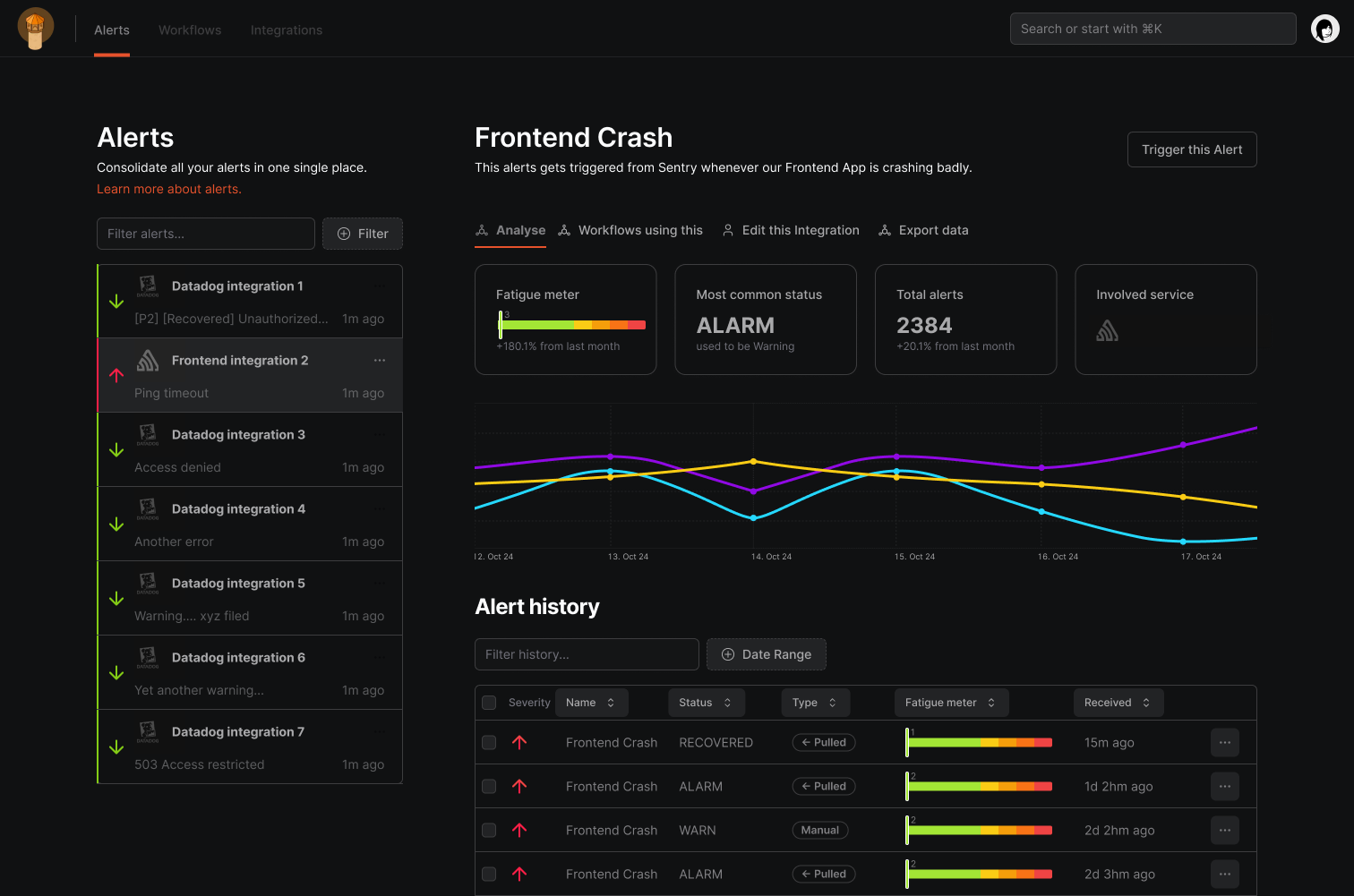

1. Centralized Alert Management

KeepHQ consolidates alerts from multiple monitoring tools into a single, unified dashboard. Say goodbye to juggling multiple systems and hello to streamlined operations.
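Under the hood, consolidating alerts means translating each tool's payload into one common shape. The sketch below illustrates that idea with a hypothetical `Alert` schema (not KeepHQ's data model): one normalizer reads an Alertmanager-style webhook body (a list of alerts carrying `labels` and a `status`), and another reads a made-up "legacy monitor" format. The `service` label and the priority mapping are assumptions chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical unified schema; field names are illustrative, not KeepHQ's data model.
@dataclass(frozen=True)
class Alert:
    source: str
    name: str
    severity: str
    service: str
    status: str

# Map the hypothetical legacy monitor's priority codes onto one shared severity scale.
PRIORITY_TO_SEVERITY = {"P1": "critical", "P2": "warning", "P3": "info"}

def from_alertmanager(payload: dict) -> list[Alert]:
    """Normalize an Alertmanager-style webhook body (a list of alerts with labels)."""
    alerts = []
    for a in payload.get("alerts", []):
        labels = a.get("labels", {})
        alerts.append(Alert(
            source="prometheus",
            name=labels.get("alertname", "unknown"),
            severity=labels.get("severity", "info"),
            # "service" is an assumed custom label; Alertmanager does not require it.
            service=labels.get("service", "unknown"),
            status=a.get("status", "firing"),
        ))
    return alerts

def from_legacy_monitor(payload: dict) -> list[Alert]:
    """Normalize a hypothetical tool that sends one flat alert per request."""
    return [Alert(
        source="legacy-monitor",
        name=payload["title"],
        severity=PRIORITY_TO_SEVERITY.get(payload["priority"], "info"),
        service=payload["app"],
        status="resolved" if payload.get("closed") else "firing",
    )]

# Example: two tools, two payload shapes, one list of alerts.
prom_body = {"alerts": [{"status": "firing",
                         "labels": {"alertname": "HighCPU", "severity": "critical",
                                    "service": "checkout"}}]}
other_body = {"title": "Checkout 5xx rate", "priority": "P2", "app": "checkout", "closed": False}

unified = from_alertmanager(prom_body) + from_legacy_monitor(other_body)
for alert in unified:
    print(alert)
```

Once every source lands in the same shape, a single dashboard, search, or workflow can treat them uniformly.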

2. AI-Driven Insights

KeepHQ uses machine learning to group related alerts, identify patterns, and reduce noise. This means fewer distractions and more focus on critical issues.
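The noise-reduction idea can be illustrated without any machine learning at all. The sketch below is a deliberately simple, rule-based stand-in (not KeepHQ's correlation logic): it first drops duplicates that share a `(name, service)` fingerprint, then folds alerts for the same service into one incident when each arrives within a time window of the previous one. The fingerprint choice and the 300-second window are assumptions for illustration.

```python
from dataclasses import dataclass

@dataclass
class Alert:
    name: str
    service: str
    timestamp: int  # seconds since epoch

def deduplicate(alerts: list[Alert]) -> list[Alert]:
    """Keep only the first occurrence of each (name, service) fingerprint."""
    seen = set()
    unique = []
    for a in alerts:
        key = (a.name, a.service)
        if key not in seen:
            seen.add(key)
            unique.append(a)
    return unique

def group_by_service(alerts: list[Alert], window_s: int = 300) -> list[list[Alert]]:
    """Put alerts for the same service into one incident if each arrives
    within window_s seconds of the previous alert in that incident."""
    incidents = []
    open_incident = {}  # service -> the incident (list) currently accepting alerts
    for a in sorted(alerts, key=lambda x: x.timestamp):
        current = open_incident.get(a.service)
        if current and a.timestamp - current[-1].timestamp <= window_s:
            current.append(a)
        else:
            current = [a]
            open_incident[a.service] = current
            incidents.append(current)
    return incidents

raw = [
    Alert("HighLatency", "checkout", 0),
    Alert("HighLatency", "checkout", 30),  # duplicate of the first alert
    Alert("PodRestart", "checkout", 60),
    Alert("DiskFull", "billing", 90),
    Alert("ErrorRate", "checkout", 200),
]
incidents = group_by_service(deduplicate(raw))
print(f"{len(raw)} alerts -> {len(incidents)} incidents")
for incident in incidents:
    print([a.name for a in incident])
```

Here five raw alerts become two incidents for the on-call engineer to look at. ML-based correlation aims at the same outcome but learns which alerts belong together instead of relying on hand-written rules.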

3. Customizable Workflows

Unlike rigid legacy tools, KeepHQ allows you to define custom workflows for escalations and automated responses. Adapt the platform to your team's unique needs.
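Conceptually, a workflow pairs a trigger condition with a list of actions. The Python sketch below mirrors that idea only; it is not KeepHQ's workflow format, and the alert fields, workflow names, and action functions (`page_oncall`, `restart_service`, `escalate_to_manager`) are hypothetical placeholders that return strings instead of calling real systems.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical alert shape: {"name", "service", "severity", "minutes_unacked"}
Alert = dict

@dataclass
class Workflow:
    name: str
    trigger: Callable[[Alert], bool]         # when should this workflow run?
    actions: list[Callable[[Alert], str]]    # what to do, in order

def page_oncall(alert: Alert) -> str:
    # Placeholder: a real action would call a paging service.
    return f"paged on-call for {alert['service']}"

def restart_service(alert: Alert) -> str:
    # Placeholder: a real action would call an orchestration API.
    return f"requested restart of {alert['service']}"

def escalate_to_manager(alert: Alert) -> str:
    return f"escalated {alert['name']} to engineering manager"

WORKFLOWS = [
    Workflow("critical-page", lambda a: a["severity"] == "critical", [page_oncall]),
    Workflow("auto-restart", lambda a: a["name"] == "ServiceDown", [restart_service]),
    # Escalate critical alerts nobody has acknowledged within 15 minutes.
    Workflow("escalate-stale",
             lambda a: a["severity"] == "critical" and a["minutes_unacked"] >= 15,
             [escalate_to_manager]),
]

def run_workflows(alert: Alert, workflows: list[Workflow] = WORKFLOWS) -> list[str]:
    """Run the actions of every workflow whose trigger matches the alert."""
    results = []
    for wf in workflows:
        if wf.trigger(alert):
            results.extend(action(alert) for action in wf.actions)
    return results

alert = {"name": "ServiceDown", "service": "checkout", "severity": "critical", "minutes_unacked": 20}
for line in run_workflows(alert):
    print(line)
```

Keeping triggers and actions as separate, composable pieces is what lets a team add an escalation rule without touching the paging or remediation logic.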

4. Open-Source Advantage

KeepHQ's open-source nature means lower costs and greater flexibility. You're not locked into proprietary solutions, and you can contribute to its ongoing development.

5. Seamless Integrations

Integrate KeepHQ with your existing tools like Prometheus, Grafana, Slack, and more. It's designed to fit seamlessly into your tech stack.
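To show what an outbound integration does at its simplest, here is a sketch of posting an alert to a Slack incoming webhook, which accepts a JSON body with a `text` field. This is a generic illustration using only the Python standard library, not KeepHQ's Slack integration; the webhook URL is a placeholder, and `build_slack_request` and `send` are hypothetical helper names.

```python
import json
import urllib.request

def build_slack_request(webhook_url: str, alert: dict) -> urllib.request.Request:
    """Build (but do not send) a POST to a Slack incoming webhook."""
    text = f"[{alert['severity'].upper()}] {alert['name']} on {alert['service']}"
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def send(request: urllib.request.Request, timeout: float = 5.0) -> int:
    """Send the request and return the HTTP status code."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return resp.status

# Placeholder URL: substitute the webhook URL Slack generates for your channel.
WEBHOOK_URL = "https://hooks.slack.com/services/T000/B000/XXXX"
req = build_slack_request(WEBHOOK_URL, {"name": "HighCPU", "service": "checkout", "severity": "critical"})
print(req.get_method(), json.loads(req.data))
# send(req)  # uncomment with a real webhook URL
```

Separating request construction from sending keeps the formatting logic testable without network access.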

Benefits of AIOps with KeepHQ

Adopting AIOps with KeepHQ can transform your IT operations in the following ways:

- Improved Productivity: AI-powered noise reduction and incident correlation save valuable time.

- Enhanced System Reliability: Proactively detect and resolve issues before they impact users.

- Cost Efficiency: As an open-source platform, KeepHQ avoids expensive licensing fees (self-hosting still carries infrastructure and maintenance costs).

- Future-Proofing: Prepare your operations for the complexities of tomorrow's infrastructure.

Is AIOps the Future?

The answer is a resounding yes. As IT environments grow more complex, traditional tools simply can't keep pace. AIOps is the natural evolution of IT operations, bringing intelligence, automation, and agility to the forefront. KeepHQ, as an open-source AIOps platform, is an excellent choice for organizations looking to modernize their operations while keeping costs under control.

Ready to Make the Switch?

Replacing your legacy ITOps application with KeepHQ is more than just a technology upgrade. It's a strategic move toward a smarter, more efficient future. With its AI-driven capabilities and open-source flexibility, KeepHQ empowers teams to stay ahead in an ever-changing landscape.

Explore KeepHQ today and embrace the future of IT operations.